In the last two posts on Using AI to Extract Key Messages from Your Blog for Social Media – Part I and Part II, we explored how the power of AI can be helpful in converting your lengthy write-up into an effective summary. Let’s kick this up a notch.

Words are great, but as they say, a picture is worth a thousand words. So, what if we take this great summary and enhance it with captivating visuals to go along with the words? And then stitch the summarized words and their matched visuals into a video accompanied by suitable audio? The richness of a video that combines visuals, audio and words is indisputable. But how realistic is it to accomplish this? Can it be done at scale? And can it be done automatically and seamlessly? Let’s dig deeper.

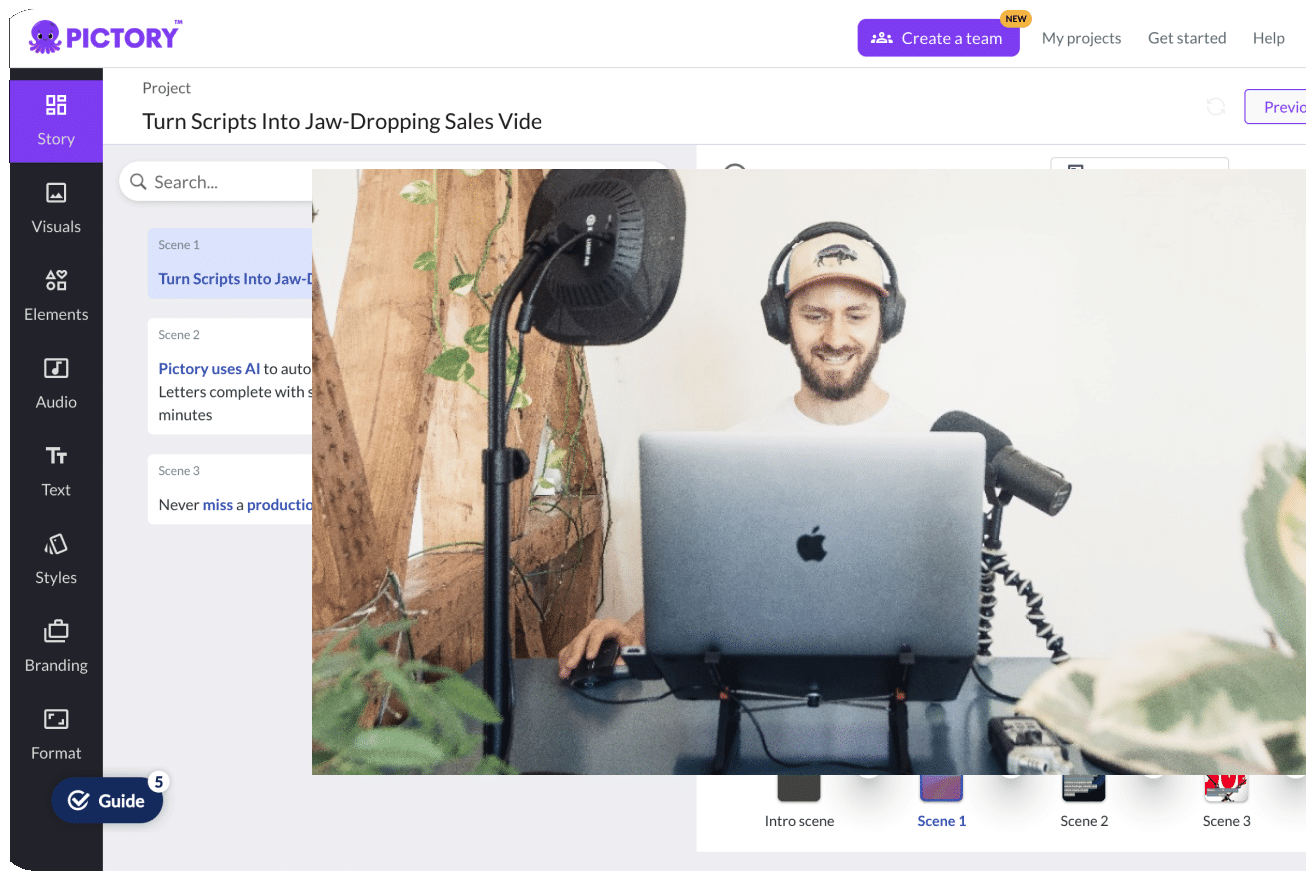

As you have seen in the previous posts, Pictory reads the article and presents a collection of sentences, each of which becomes one scene in the future video.

Entity Recognition

To match a visual to a scene, Pictory begins by finding the most relevant keywords in the sentence, and to find those keywords it focuses on the entities within the sentence. Pictory uses an algorithm whose input is the sentence and whose output is the set of entities present in it. Sentences vary in length and in the number of entities they contain; the key is to find the entity that best fits the context of not only the stand-alone sentence but also the entire article.
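To make the “sentence in, set of entities out” idea concrete, here is a minimal sketch. It is not Pictory’s algorithm, which this post does not detail. It assumes a simple dictionary lookup (often called a gazetteer), and the `KNOWN_ENTITIES` list and the `extract_entities` function are made up purely for illustration.

```python
import re

# Hypothetical gazetteer: a tiny hand-made list of known entity phrases.
KNOWN_ENTITIES = {"corona virus", "bats", "vaccine", "immune system"}


def extract_entities(sentence, known_entities=KNOWN_ENTITIES, max_words=3):
    """Return the set of known entity phrases found in the sentence."""
    words = re.findall(r"[a-z0-9']+", sentence.lower())
    found = set()
    i = 0
    while i < len(words):
        # Try the longest phrase starting at position i first.
        for n in range(min(max_words, len(words) - i), 0, -1):
            phrase = " ".join(words[i:i + n])
            if phrase in known_entities:
                found.add(phrase)
                i += n  # skip past the matched phrase
                break
        else:
            i += 1  # no phrase starts here, move on one word
    return found


entities = extract_entities("The corona virus spreads from bats.")
print(entities)
```

A production system would more likely rely on a trained named-entity or noun-phrase model than on a fixed list, but the input and output have the same shape.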

Words are great, but accompanying them with appropriate visuals can create magic

Adding Article Context

Suppose the sentence is “The corona virus spreads from bats.” In this sentence, the entities are “bats” and “corona virus”, the two nouns. Now the task is to find one visual that captures the core purpose of this sentence. So which entity should we use as the search keyword for that one visual: bats or the virus? This is where the context of the entire article comes into play. If the article’s key message is centered around the corona virus, we would select the “corona virus” entity to find the appropriate visual. On the other hand, if the article discusses different kinds of animals that spread various diseases, we would choose “bats” instead. This is exactly the thought process that Pictory’s AI goes through to choose one entity for each sentence from the list of entities extracted from that sentence.
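The bats-versus-virus decision can be sketched in a few lines. This is only an illustration: it assumes that “article context” can be approximated by how often each candidate entity is mentioned in the article, which is a stand-in for whatever Pictory actually does. The function names and both sample articles are invented for the example.

```python
import re


def count_mentions(text, phrase):
    """Count whole-word occurrences of a phrase, ignoring case."""
    pattern = r"\b" + re.escape(phrase.lower()) + r"\b"
    return len(re.findall(pattern, text.lower()))


def pick_entity(candidates, article):
    """Pick the candidate entity that the article mentions most often."""
    # sorted() makes ties deterministic: the alphabetically first entity wins.
    return max(sorted(candidates), key=lambda e: count_mentions(article, e))


candidates = {"bats", "corona virus"}

# Two made-up articles that both contain the same sentence.
virus_article = (
    "The corona virus spreads from bats. The corona virus has changed "
    "daily life. Researchers study how the corona virus mutates."
)
animal_article = (
    "The corona virus spreads from bats. Bats also carry rabies. "
    "Mosquitoes, ticks and bats all transmit diseases to humans."
)

print(pick_entity(candidates, virus_article))   # corona virus
print(pick_entity(candidates, animal_article))  # bats
```

The same sentence yields a different search keyword depending on which article it sits in, which is the point of the example.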

Finding the Best Visuals

When finding a visual, Pictory has access to millions of stock visuals (images and videos) available on the media platforms the tool uses, such as Shutterstock, Pixabay and Storyblocks. Choices are great, but the task is to find the single best match. So, let’s say the keyword for the sentence is “corona virus”. This becomes the search term on the media content platforms. When the term “corona virus” is looked up, a range of visuals appears: ones with bats, ones with only the virus, ones with humans affected by the virus, and so on. The task is now to decide which of these visuals is best for the sentence.

Pictory again harnesses the power of vector mapping to do so. Let’s take a look at what this means. Each stock image or video has a description (tags) associated with it that summarizes its content. Pictory extracts the descriptions of the top visuals that are a potential match for the keyword. It then calculates the cosine similarity between the vector of each description and the vector of the entire article in order to find the visual best suited to the article’s context. Cosine similarity is the dot product of two vectors divided by the product of their lengths; for vectors with non-negative components, such as word counts, it ranges from 0 (nothing in common) to 1 (pointing in the same direction). Every visual receives a cosine score, Pictory sorts the visuals by that score, and the visual with the highest score (1 being the best) is ultimately selected.
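Here is a rough sketch of that ranking step. How Pictory turns text into vectors is not described in this post, so the example assumes plain word-count vectors. The visual IDs, tag descriptions and article text are invented for illustration.

```python
import math
import re
from collections import Counter


def to_vector(text):
    """Turn text into a word-count vector (an assumed, very simple encoding)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has nothing in common with anything
    return dot / (norm_a * norm_b)


def rank_visuals(descriptions, article):
    """Return (score, visual_id) pairs, best match first."""
    article_vec = to_vector(article)
    scored = [
        (cosine_similarity(to_vector(desc), article_vec), visual_id)
        for visual_id, desc in descriptions.items()
    ]
    return sorted(scored, reverse=True)


article = (
    "The corona virus spreads from bats. "
    "Scientists study the corona virus in a lab."
)
descriptions = {  # hypothetical stock-visual tags
    "img_bat": "bats flying in a cave at night",
    "img_virus": "corona virus under microscope in lab",
    "img_mask": "people wearing face masks",
}

ranking = rank_visuals(descriptions, article)
best_score, best_visual = ranking[0]
print(best_visual)  # img_virus
```

Word counts let common words such as “in” or “a” nudge the score, so a real system would probably drop stop words or use weighted or learned vectors. The sort-by-score step stays the same either way.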

Customizing Your Choices

Now, while the process described above works most of the time, there are always exceptions. What if the user isn’t happy with the visual the tool selects? What if the user wants to use a custom visual?

Pictory uses computer-vision-based enhancement to tackle this. Suppose a user wants to change the visual that Pictory has chosen for a particular scene. The user can either run a manual search on Pictory or upload their own image to the platform. In the latter case, Pictory uses computer vision to obtain keywords that describe the components of the image. Pictory then repeats the process detailed in the previous sections to find visuals for those keywords and presents them to the user, so that the user has several options to choose from.

In other AI applications, machine learning can also take in specifications for image quality and/or size and filter the candidate visuals accordingly. Systems can harness collaborative filtering, a method that predicts a user’s interests from the collective preference data of many users, to further refine the visual output. AI can also create captivating visuals from scratch through Generative Adversarial Networks (GANs), adding a uniqueness factor to the visuals.
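To give a feel for collaborative filtering, here is a toy sketch of one common variant, user-based filtering. It is a generic illustration, not something this post attributes to Pictory. The user names, visual IDs and the choice of Jaccard overlap as the similarity measure are all assumptions made for the example.

```python
def jaccard(a, b):
    """Overlap between two sets of picks: 0 = nothing shared, 1 = identical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(target_user, picks_by_user):
    """Suggest visuals the target user has not picked yet, weighted by how
    similar each other user's picks are to the target user's picks."""
    target = picks_by_user[target_user]
    scores = {}
    for user, picks in picks_by_user.items():
        if user == target_user:
            continue
        weight = jaccard(target, picks)
        for visual in picks - target:
            scores[visual] = scores.get(visual, 0.0) + weight
    # Highest score first; names break ties so the order is deterministic.
    return sorted(scores, key=lambda v: (-scores[v], v))


# Hypothetical history of which visuals each user chose in the past.
picks_by_user = {
    "ana": {"virus_closeup", "lab_scene"},
    "ben": {"virus_closeup", "lab_scene", "microscope"},
    "cy": {"bat_cave", "forest"},
}

suggestions = recommend("ana", picks_by_user)
print(suggestions[0])  # microscope
```

Because “ben” has picked the same visuals as “ana” in the past, his extra pick ranks first, while the picks of “cy”, who shares nothing with her, carry no weight.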

Summary

In summary, using AI to find the best visuals for creating a video from an article involves the following steps:

(1) Find the best keywords/entities associated with the context of the sentence.

(2) Use the keyword to find appropriate visual choices from available image platforms along with description for the visuals.

(3) Select one visual based on the description mapping to the context of the entire article.