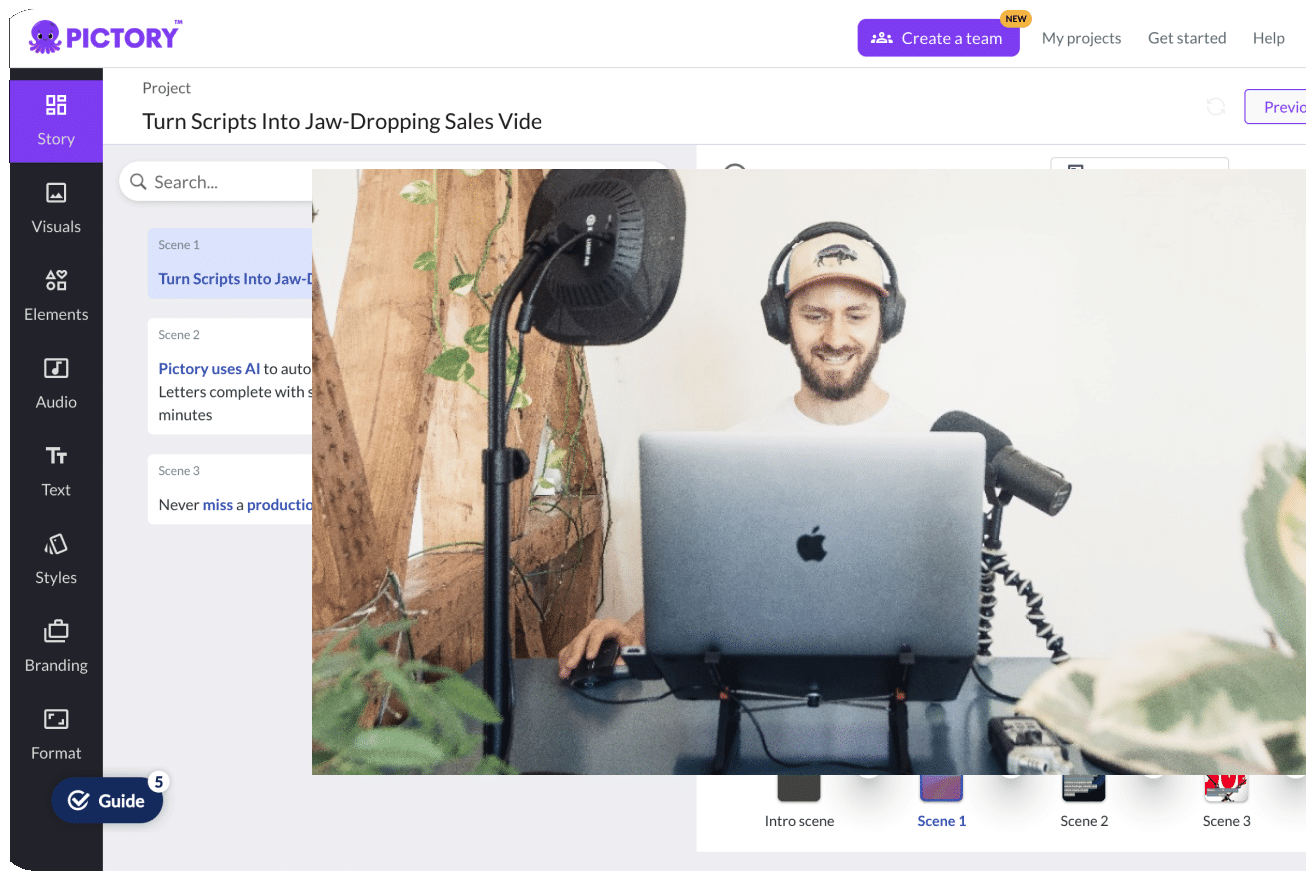

Artificial Intelligence (AI) is automating much of our everyday lives and professional world. AI-driven automation lets you play chess against a computer, ride home in a self-driving car, and ask Alexa what the weather is like. It also allows software like Pictory to help you create and edit professional-quality videos without any technical skills.

Artificial Intelligence is used to accelerate and automate many industrial and manufacturing processes, and it has greatly advanced our technology, health, and economy. However, AI can also be used in ways that harm society. One of the most prominent ways AI has been misused is through deepfakes.

Deepfake technology can create fake videos, photos, and audio, swapping faces and voices in digital content to produce realistic-looking fake media. As stated by Merriam-Webster, “deep” refers to the underlying AI technology of “deep learning”, which uses artificial neural networks with many layers to synthesize fake content.

Deepfakes are media that have been altered and manipulated to show someone doing or saying something they never actually did or said. They are often used to paste a celebrity’s face onto someone else’s body, or to manipulate a celebrity’s video or voice so that the celebrity appears to say or do something else.

Well-known examples include a video of Mark Zuckerberg boasting about having total control over billions of people’s stolen data, a video of Barack Obama cursing out Donald Trump, and even AI-run social media profiles. All of these are deepfakes, some with more political motive than others.

There are several different ways to create deepfakes, “but the most common relies on the use of deep neural networks involving autoencoders which employ a face-swapping technique”, according to Business Insider. Using pictures of two faces, these autoencoders are able to track the differences in features and characteristics and impose one person’s facial structure and mannerisms onto the other’s.

Business Insider shares that “the autoencoder is a deep learning AI program tasked with studying the video clips to understand what the person looks like from a variety of angles and environmental conditions”. The program then uses what it has learned to map this person onto the individual in the target video by finding features the two faces have in common.

Another method of creating deepfakes is through Generative Adversarial Networks (GANs), “which detects and improves any flaws in the deepfake within multiple rounds”, states Business Insider, and this makes the finished deepfake harder for deepfake detectors to catch. GANs study large amounts of data to learn how to imitate real media with very accurate results.

Deepfakes can also be easily created with mobile apps and software such as Zao, DeepFaceLab, FaceApp, and Face Swap, which make deepfake creation far more accessible to the general public, and therefore more dangerous to the public as well.

Artificial Intelligence has automated, and therefore greatly accelerated, the process of creating deepfakes.

Before automation, you would have had to manually Photoshop or otherwise edit every image, video frame, and voice recording you wanted to turn into a deepfake. With Artificial Intelligence, this process happens automatically and quickly, allowing high-quality deepfakes to be produced at alarmingly high rates.

As deepfakes became easier to create, especially for beginners, they found their place in the darker corners of the internet. The first deepfakes emerged in 2017, when a Reddit user posted doctored pornographic clips on the site. According to The Guardian, “the videos swapped the faces of celebrities – Gal Gadot, Taylor Swift, Scarlett Johansson and others – on to porn performers.”

In fact, of the 15,000 deepfake videos that Deeptrace, an AI firm, found online in September 2019, “a staggering 96% were pornographic and 99% of those mapped faces from female celebrities on to porn stars”, states The Guardian. This shows how deepfake technology is actively being weaponized against women, reinforcing many of the sexist structures and systems we currently live under.

Interestingly enough, not all deepfakes are bad; some are entertaining and even helpful. According to The Guardian, “voice-cloning deepfakes can restore people’s voices when they lose them to disease”, and “deepfake videos can enliven galleries and museums”. The Dalí Museum, for example, features a deepfake of the surrealist painter who introduces his art and takes selfies with visitors.

In the entertainment industry, deepfake technology can be used to “improve the dubbing on foreign-language films, and more controversially, resurrect dead actors.” In these ways, deepfakes are being used to advance and automate art, making it more interesting and digestible to the public.

Beyond being used to exploit women in pornographic clips, and to advance art, deepfakes have recently become a top concern for the Pentagon because of their alarming ability to build distrust in our society. Because deepfake technology is so accessible to the general public, anyone with political, economic, or personal motives can make an incriminating or slanderous video and post it on the internet.

The Guardian explains that “the more insidious impact of deepfakes, along with other synthetic media and fake news, is to create a zero-trust society, where people cannot, or no longer bother to, distinguish truth from falsehood”. Without this trust, it becomes much easier to cast doubt on specific events or facts.

When a deepfake is posted on the internet, it can be incredibly hard to tell that the video is fake. In the past, you could often spot a deepfake because the person’s blinking looked unnatural, since the AI algorithms were usually trained only on images of people with their eyes open.

However, because artificial intelligence can learn from its mistakes so quickly, this weakness was fixed almost as soon as it was revealed. Deepfakes keep getting more accurate and believable, and spotting them keeps getting harder.

“The problem may not be so much the faked reality as the fact that real reality becomes plausibly deniable,” says Prof Lilian Edwards, a leading expert in internet law at Newcastle University. We can see this when politicians and public figures try to cover up the truth or do damage control on bad PR by claiming that a real video or photo of them is fake.

For example, Donald Trump reportedly suggested that the recording of his misogynistic and demeaning comment about grabbing women by their genitals was fabricated. Although there is very real footage of the comment, Trump was still able to cast it as fake and lead his followers to believe it was not real.

So if we cannot tell what is real or fake on the internet, how do we know what the truth is? For over a century, video, audio, and photos have been our basis for truth.

CNN states that “not only have sound and images recorded our history, they have also informed and shaped our perception of reality.” If these videos, audios, and photos can be easily manipulated and therefore cannot be trusted, the way we perceive and experience the world completely changes.

We have grown used to learning from media shared online from across the world, and many people rely on social media to educate themselves on world news and politics. “If deepfakes make people believe they can’t trust video, the problems of misinformation and conspiracy theories could get worse”, says CNN.

A distrustful society full of misinformation and conspiracy theories cannot advance or improve. To make their own personal and political decisions, people need to be able to find the truth without having to second-guess whether what they are seeing is a deepfake.

The solution, ironically, is Artificial Intelligence itself. AI algorithms are being trained on both real and deepfake videos to spot harmful deepfakes on the internet so they can be taken down.

As more deepfakes are discovered, these algorithms will keep improving, ideally reaching a point where all harmful deepfakes can be removed from the internet.

With automation comes automatic democratization. Artificial Intelligence has made deepfake creation available to anyone, giving people the power to make potentially harmful content. Similarly, Pictory automates, and therefore also democratizes, video creation and production, allowing anyone to create and edit their own video.

This shows how far-reaching Artificial Intelligence can be: AI algorithms can be trained on almost any data, and can therefore be built for positive or malicious purposes.

All this power comes down to you. Will you use artificial intelligence to help automate and advance our society, or will you use it to create damaging deepfakes that instill distrust in our government and society? The choice is yours.
